For decades, the U.S. healthcare system has been weighed down by frontline worker shortages, waste and inefficiencies. Organizations have struggled to tap into the massive volume of unstructured data that lives in silos and engage effectively with their most important stakeholders – patients.

Generative artificial intelligence is poised to transform healthcare by enabling solutions to effectively address these challenges. This cutting-edge technology opens new avenues for innovation in diagnosis, treatment planning, operational efficiency and overall healthcare delivery – and industry leaders are taking notice. A recent survey revealed that 25% of healthcare organizations implemented a generative AI solution in 2023, while 58% plan to adopt one in 2024. However, the generative AI-driven transformation won’t – and shouldn’t – happen overnight.

Unlike its rule-based predecessors, generative AI derives its real value from the ability to produce varied outputs. While this flexibility is advantageous, the technology inherently presents risks, particularly around understanding why specific outputs are generated, where training data came from, whether the models are biased, and other “black box” issues. This lack of transparency raises red flags around clinical validation and reliability, regulatory compliance and disparities in healthcare delivery, which could ultimately erode trust among all stakeholders.

Does this mean organizations should sit on the sidelines? Definitely not. They need to start piloting now. Some are already using generative AI solutions to scribe clinician notes, draft responses for communicating with patients based on EHR integrations, and easily access patient information at the point of care. Others should follow this lead and embrace a phased implementation that considers the value, readiness and risk of integrating the technology across different use cases.

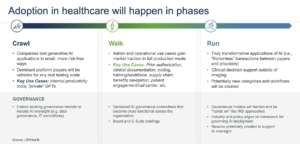

Quick wins will be achieved in non-threatening areas, while the complexity of healthcare and the need for responsible implementation will extend the timeline in riskier areas. Healthcare stakeholders can mitigate the risks and maximize return on investment with a crawl, walk, run approach that includes appropriate governance models each step of the way as risk increases. This strategy is important for health system executives to consider because just 16% currently have an AI governance policy in place. Thoughtful progression on implementation and governance will be an imperative over the next decade.

Crawl phase: As in a pilot, organizations test generative AI applications on a small scale, choosing use cases that present minimal risk, such as internal productivity tools. These can be deployed with little exposure while embedding know-how and culture around the new technology. A majority of use cases to date have been in this phase.

Walk phase: Administrative and operational uses, such as prior authorization, clinical documentation, patient engagement and member services, gain traction. Organizations need to consider how they can put generative AI-driven solutions for use cases with moderate risk into full production mode. The industry is starting to move into this phase.

Run phase: This is where the true transformation happens, and the industry will begin to see substantial ROI. Use case examples include clinical decision support, care planning, and “frictionless” transactions between payers and providers. When reaching the run phase, organizations may be analyzing potential new categories and workflows to which generative AI can be applied, not just applying the technology to existing workflows. Nobody’s here yet – everyone is still learning how to crawl and walk.

As healthcare organizations hit these milestones, governance will play a critical role in managing the risks associated with generative AI. During the pilot phase, stakeholders may choose to simply extend the purview of existing governance models (such as data governance and IT committees) to include AI oversight. As adoption of AI moves from pilot projects to broader implementation, governance models will need to evolve to incorporate clinical input and cross-functional teams. Dedicated AI governance committees should be set up with responsibility for organization-wide AI strategy and deployment. They should be accountable to the board and the C-suite, given the importance and potential risks associated with AI.

AI companies that are selling into these organizations should be prepared for this enhanced oversight. In the run phase, we will see hardened approaches that may begin to look like Independent Review Boards. By this point, the industry and policymakers may have aligned on a mutually agreed-upon AI governance framework that will create more clarity for AI developers and users.

We are already heading in that direction. The Biden administration issued an executive order on AI in October 2023 to promote “safe, secure and trustworthy development and use of artificial intelligence.” That December, a community of researchers, developers and organizational leaders launched the AI Alliance to develop AI responsibly.

As we embark on this transformative journey, generative AI has enormous potential to reshape the healthcare landscape – addressing what have been insurmountable obstacles until now. We’re seeing progress already, but it’ll be a decade before we realize the true potential.